New Publications: Article in ACM Computing Surveys, Paper at CHI'23, and Paper at VR'23

We are happy to announce three major publications to start off 2023!

In our recently published article in the journal ACM Computing Surveys, we categorize metrics for cognitive workload, support researchers in selecting suitable measurement modalities, and conclude by identifying gaps for future research.

A Survey on Measuring Cognitive Workload in Human-Computer Interaction

Thomas Kosch*¹, Jakob Karolus*², Johannes Zagermann*³, Harald Reiterer³, Albrecht Schmidt⁴, and Paweł W. Woźniak⁵

¹HU Berlin; ²German Research Center for Artificial Intelligence; ³University of Konstanz; ⁴LMU Munich; ⁵Chalmers University of Technology

*First three authors contributed equally

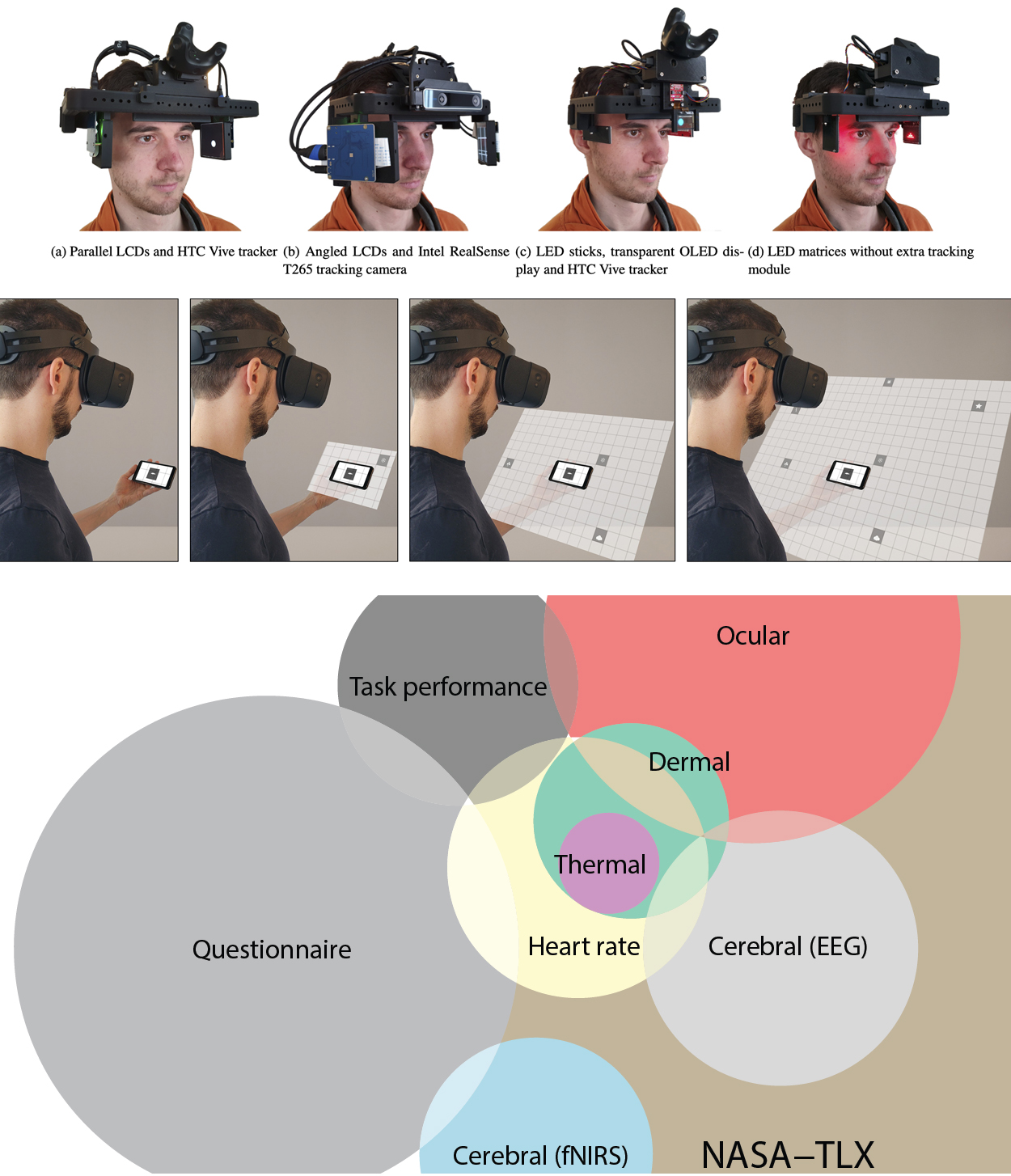

The ever-increasing number of computing devices around us results in more and more systems competing for our attention, making cognitive workload a crucial factor for the user experience of human-computer interfaces. Research in Human-Computer Interaction (HCI) has used various metrics to determine users' mental demands. However, there is no systematic way to choose an appropriate and effective measure for cognitive workload in experimental setups, which poses a challenge to their reproducibility. To address this challenge, we present a literature survey of past and current metrics for cognitive workload used throughout the HCI literature. By first exploring what cognitive workload means in the HCI context, we derive a categorization that supports researchers and practitioners in selecting cognitive workload metrics for system design and evaluation. We conclude with the following three research gaps: (1) defining and interpreting cognitive workload in HCI, (2) the hidden cost of the NASA-TLX, and (3) HCI research as a catalyst for workload-aware systems. These gaps highlight that HCI research has to deepen and conceptualize the understanding of cognitive workload in the context of interactive computing systems.

Feel free to read the article and browse our paper library.

_ _ _ _ _

At this year's CHI'23 in Hamburg, we will present the results of a user study investigating how the size of virtual extensions for smartphones affects spatial memory.

Sebastian Hubenschmid, Johannes Zagermann, Daniel Leicht, Harald Reiterer, and Tiare Feuchtner

Smartphones conveniently place large information spaces in the palms of our hands. While research has shown that larger screens positively affect spatial memory, workload, and user experience, smartphones remain fairly compact for the sake of device ergonomics and portability. Thus, we investigate the use of hybrid user interfaces to virtually increase the available display size by complementing the smartphone with an augmented reality head-worn display. We thereby combine the benefits of familiar touch interaction with the near-infinite visual display space afforded by augmented reality. To better understand the potential of virtually extended displays and the possible issues of splitting the user's visual attention between two screens (real and virtual), we conducted a within-subjects experiment with 24 participants completing navigation tasks using different virtually augmented display sizes. Our findings reveal that a desktop monitor size represents a "sweet spot" for extending smartphones with augmented reality, informing the design of hybrid user interfaces.

You can watch the accompanying video here, and our work is also available as an open-source project on GitHub!

_ _ _ _ _

We will present our paper "MoPeDT" remotely at this year's IEEE VR 2023 in Shanghai.

MoPeDT: A Modular Head-Mounted Display Toolkit to Conduct Peripheral Vision Research

Matthias Albrecht, Lorenz Assländer, Harald Reiterer, and Stephan Streuber

Peripheral vision plays a significant role in human perception and orientation. However, its relevance for human-computer interaction, especially for head-mounted displays, has not yet been fully explored. In the past, a few specialized appliances were developed to display visual cues in the periphery, each designed for only a single, specific use case. A multi-purpose headset that exclusively augments peripheral vision did not yet exist. We introduce MoPeDT: Modular Peripheral Display Toolkit, a freely available, flexible, reconfigurable, and extendable headset for conducting peripheral vision research. MoPeDT can be built with a 3D printer and off-the-shelf components. It features multiple spatially configurable near-eye display modules and full 3D tracking inside and outside the lab. With our system, researchers and designers can easily develop and prototype novel peripheral vision interaction and visualization techniques. We demonstrate the versatility of our headset with several possible applications for spatial awareness, balance, interaction, feedback, and notifications. We conducted a small study to evaluate the usability of the system and found that participants were largely not irritated by the peripheral cues, but that the headset's comfort could be further improved. We also evaluated our system against established heuristics for human-computer interaction toolkits to show how MoPeDT adapts to changing requirements, lowers the entry barrier for peripheral vision research, and provides expressive power through the combination of modular building blocks.

Find the accompanying video on YouTube and access the code on GitHub.